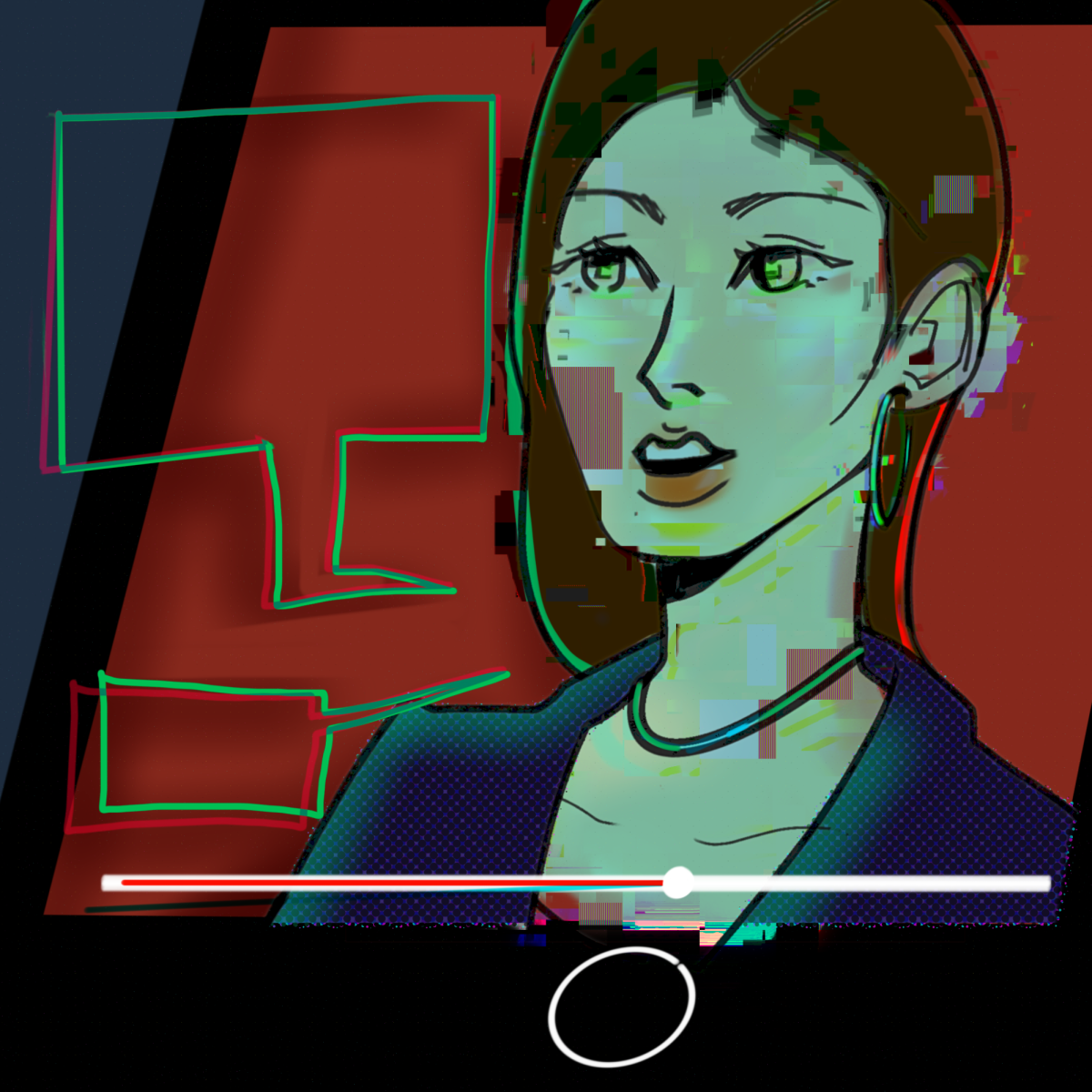

A baby with eyes that sparkle like stars on a clear night, long lashes and a button nose is… wearing the face of Tesla CEO and SpaceX founder Elon Musk? This deepfaked video of Elon Musk's face on a baby is peak internet comedy, putting even Tesla's Autopilot to shame.

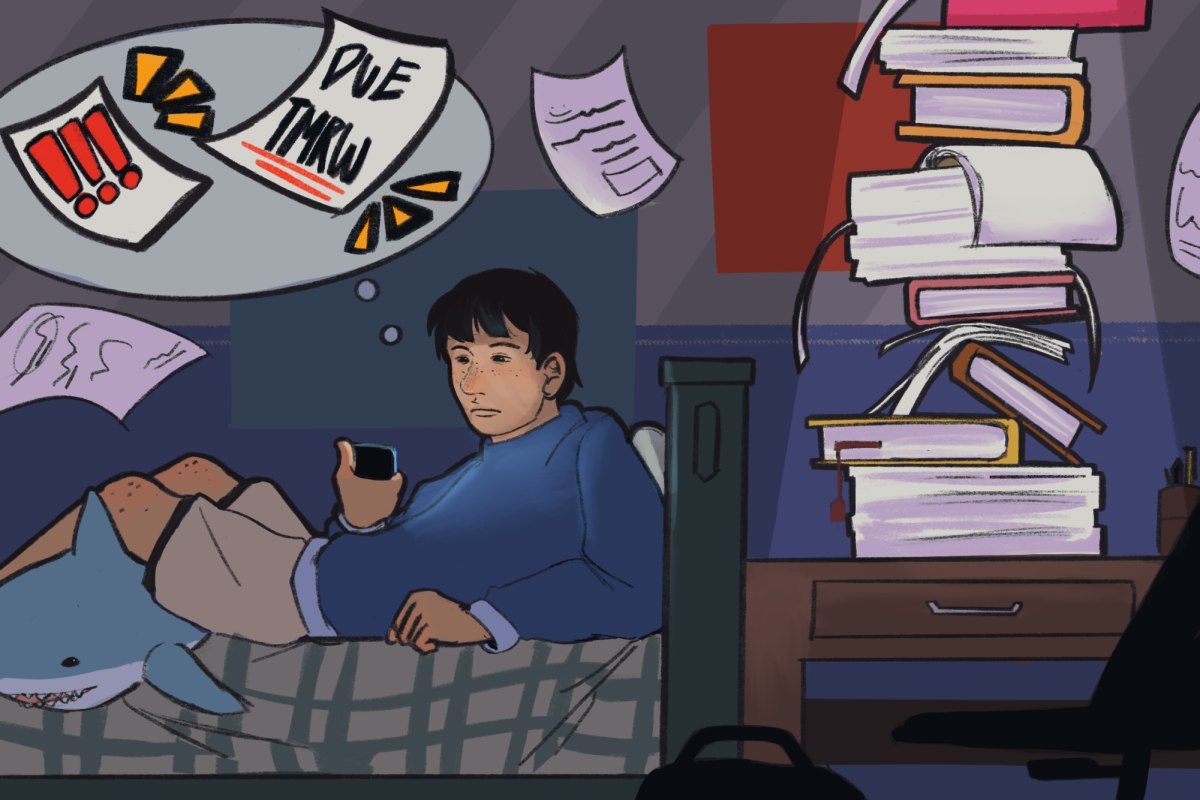

These "deepfakes," however, aren't just silly internet memes. Because almost anyone can make and share them, they can be used to spread misinformation and deceive mass audiences.

Deepfakes are AI-generated images, videos or audio clips that impersonate real people. They are produced by artificial neural networks trained on large collections of images, videos and audio. These models learn to identify and replicate patterns in human features, which makes the results eerily realistic.

The technology is inherently problematic because it poses a significant threat to online security and safety. Deepfakes are often used to steal identities, and anyone who can create them can easily tarnish the reputations of their victims or commit fraud.

“Feeding a few videos into an AI model is literally all that’s needed to generate a deepfake of someone,” Machine Learning Club secretary junior Arnav Dhar said. “The worst part is that they can be made of someone completely without their consent.”

A prime example is a deepfake TikTok ad claiming that content creator MrBeast was offering $2 iPhones in a 10,000-phone giveaway. Within a few hours of the post, TikTok removed the ad and the account.

"Lots of people are getting this deepfake scam ad of me… are social media platforms ready to handle the rise of AI deepfakes?" MrBeast said on X in response to the ad. "This is a serious problem."

This is concerning not only for celebrities, who could face public scrutiny for comments and actions that were entirely fabricated, but also for ordinary people, as deepfake manipulation becomes harder to spot.

Deepfake tools have become increasingly easy to access, meaning almost anyone can make a deepfake of anyone else. This raises the likelihood that deepfakes will be used to spread misinformation.

Con artists from Hong Kong used AI-powered deepfake technology to clone loved ones' voices from short audio clips posted on social media, then sent WhatsApp messages impersonating family members or friends to ask for money. More than 24 people fell victim to the scam, losing more than HK$800,000 (roughly US$100,000) within a week.

To identify a deepfake online, look for things that seem off: jerky movements, shifts in skin tone, unusual blinking (or no blinking at all) and mouth movements that don't match the audio. To protect yourself from audio deepfakes, agree on a code word with family and friends ahead of time, and ask the person on the other end to say it to verify their identity.

For now, basic cybersecurity practices can be effective at protecting you from deepfakes. Deepfakers often pull source images from social media, so it's important to be cautious with what you post. Consider sharing photos with parts of your face covered so that any deepfakes made from them are less accurate.

“Deepfakes have become an increasing threat with more of our lives moving online,” CyberPatriots team networking specialist sophomore Tiavani Dante-Evans said. “Security measures are evolving as well to combat these threats, but the impact of AI is still a concern.”