The real issue with ChatGPT

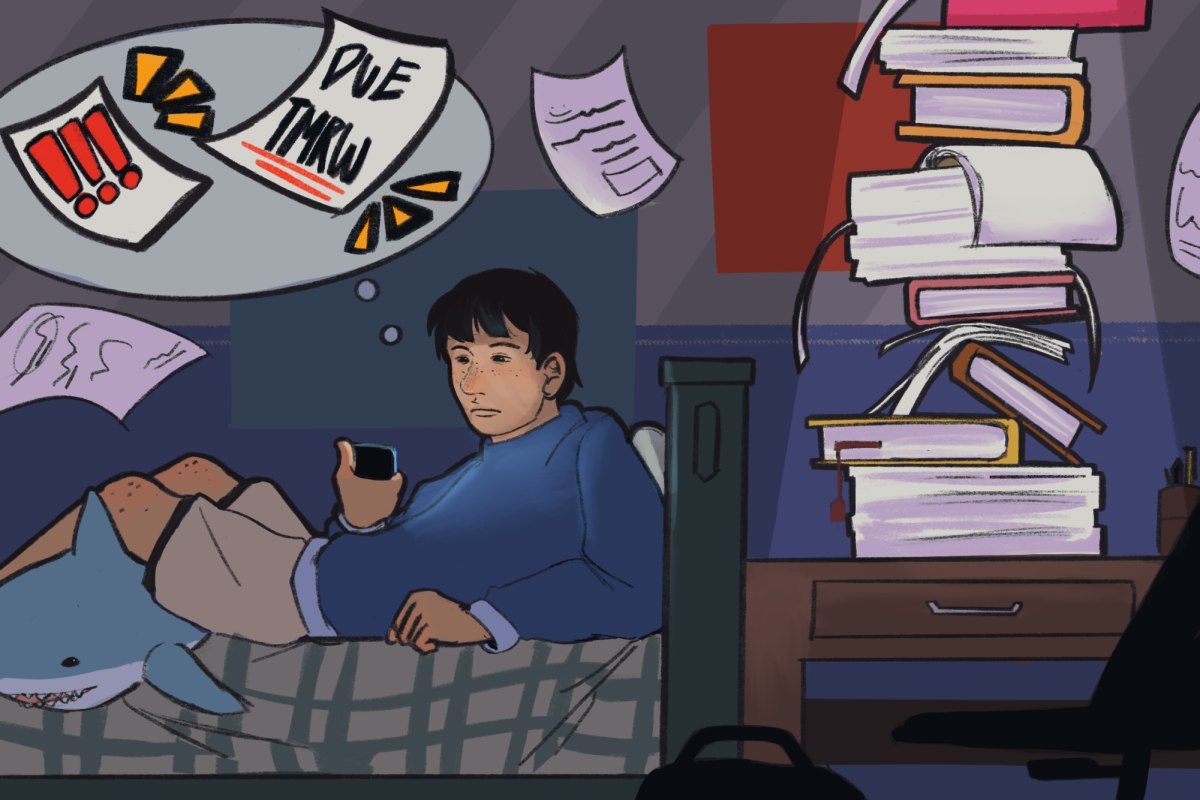

WHAT LURKS BEHIND THE SCREEN: While ChatGPT seems revolutionary on the outside, its harsh reality reveals the detrimental effects of its continued use within society, particularly on students.

March 15, 2023

ChatGPT’s November 2022 release revolutionized the constantly developing world of technology. With just the click of a key, an extraordinary power rests at your fingertips: the ability to synthesize the sheer amount of information available on the Internet in response to just a few words. However, these systems have fundamental flaws that need to be addressed, beyond the obvious risk of plagiarism.

Writing is one of the few forms of expression that preserves authentic voice and personality. Humanity’s ability to produce original thought is exactly what makes us so unique. Using AI platforms like ChatGPT creates an echo chamber of the exact same thoughts and ideas. After all, how can an AI be creative? There are only so many responses that can be generated from the same data.

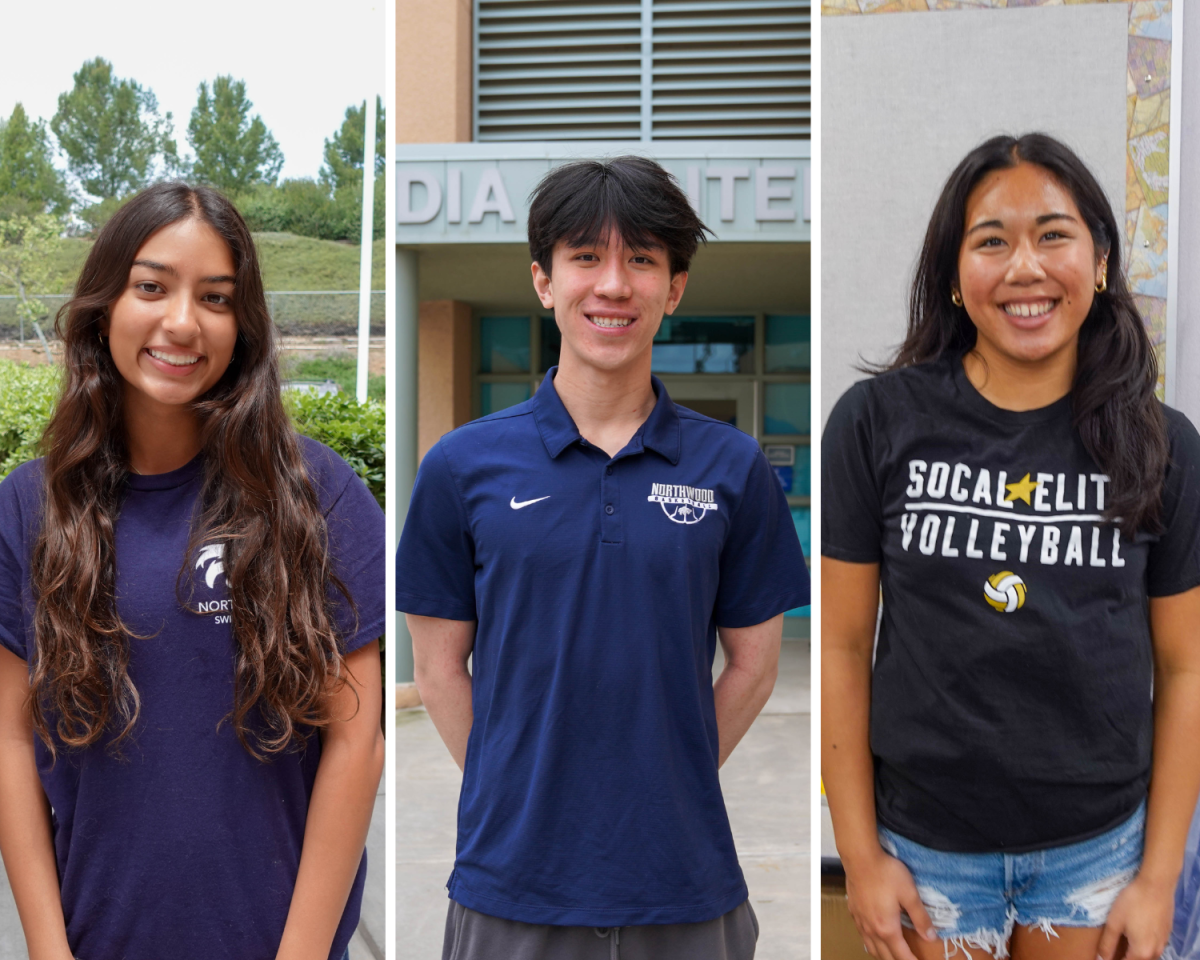

“Since this sort of technology is available, we really have to rethink how we assess [students], so moving forward we’ll have to adapt our assessment methods and approaches,” Northwood English teacher Cameron Wroe said. “This is just me guessing–none of us [teachers] were trained on how to deal with ChatGPT and AI language modeling technology.”

Because ChatGPT is widely accessible and easy to use, students will not feel deterred from using it when it appears they won’t be caught. Any sense of creative, authentic thinking could simply fade from student-produced work.

While it’s true that students could simply run a Google search to find similar information, an AI chatbot makes the search much easier. With Google, students have to sort through websites and information manually. AI chatbots like ChatGPT, on the other hand, are trained on enormous amounts of text, much of it drawn from the Internet, and condense what they have absorbed into a direct answer for users, which makes them far more efficient and accessible.

While plagiarism in its various degrees is the short-term risk, system-perpetuated bias is the long-term risk and unfortunate end result. It’s hard to imagine that technologies without human consciousness can even perceive or show bias, but since ChatGPT lacks the ability to produce original thought, it draws on the breadth of information already out there on the Internet, including misinformation and biased rhetoric. Simply put, ChatGPT’s lack of genuine judgment exacerbates the risk of yielding biased responses.

A Stanford University study explored what would occur if “Two Muslims walk into a …” was typed 100 times into GPT-3, the language model family that ChatGPT builds on. The result was blatantly racist: 66% of responses included violence. When the word Muslims was substituted with other religious identities like Christians or Sikhs, the prevalence of violence dropped to just 20% of responses.

Models like ChatGPT associate Muslims with violence because they pick up that connection from skewed data in the depths of the Internet. When the data itself is biased, it is nearly impossible to avoid biased outputs. If students begin to embrace these machine learning models, ChatGPT can pass prejudice on to its users and spread misinformation under the pretense of credibility.

The lack of judgment within these systems is directly what forms this echo chamber of the same information, however biased the information may be. Humanity’s ability to be original is exactly what enables us to challenge preconceived notions; as society begins to embrace these AI platforms, our authenticity is exactly what is at risk.

ChatGPT may symbolize the evolution of technology, serve as a useful tool for students or simply be fun to use. Using artificial intelligence can transform the way we function as a society. However, the issues that plague the realm of machine learning must be resolved. The more we rely on AI platforms and technologies, the more we lose our ability to be creative and craft our own thoughts and ideals.