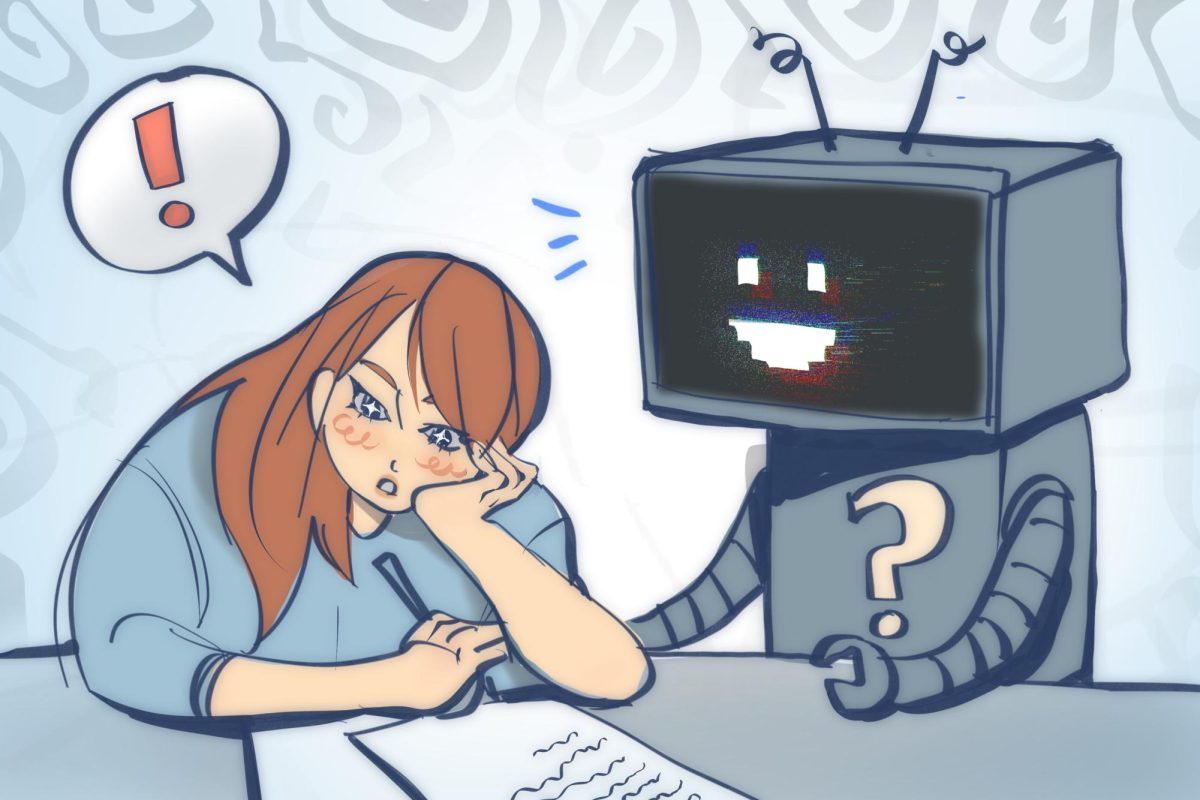

Since artificial intelligence has spread to classrooms, Northwood students have been cautioned that using any AI tool without explicit teacher permission may result in an academic honesty violation under the Timberwolf Code of Conduct.

However, Northwood’s new AI policy should focus on building AI literacy and guiding students toward ethical, effective uses of technology in learning.

In January 2023, New York City public schools blocked access to ChatGPT on all devices and networks owned by the school system. Schools could request access only for “technology-related educational purposes,” which in effect limited AI use to computer science classes. However, the restriction applied only to school networks and devices. Students could still access ChatGPT on non–Education Department devices or networks, meaning any home internet connection or personal device.

For many students, bypassing this ban takes less time and effort than completing a tedious assignment, so restricting access to tools like ChatGPT rarely removes the incentive to use them; it simply drives that use underground, where it is harder for administrators to track. This is especially tricky because AI detection is hardly foolproof: Turnitin returns false positives about 4% of the time.

In addition, 96% of teachers expect AI to be a permanent fixture in classrooms within the next decade. When an innovation can entirely change how humans live, the right course of action isn’t to avoid it, but to evolve with it. For instance, Connecticut is testing an AI program in seven school districts, offering tutoring systems, feedback tools and staff training so that staff can guide students in critically analyzing AI-generated work.

The main reason schools impose full bans on AI is the fear that over-reliance will erode critical thinking and problem-solving skills. However, when schools such as those in New York ban AI instead of guiding its ethical use, they give students neither practice nor boundaries for using it responsibly. The result isn’t less dependence; it’s dependence without direction.

More importantly, AI has been advancing exponentially in recent years (literally). The time it takes to train large AI models halves about every 8 months, meaning models that once took years to train now finish in months. Technology is moving too fast for schools to rely on blanket bans. Outside of school, AI is already part of professional work in coding, design, research and business.

Some Northwood classes already show how AI can be used responsibly. In fact, the College Board’s official policy for AP Computer Science Principles lets students use generative AI to understand coding concepts, assist with development and debug code, but emphasizes that students must review, understand and be able to explain any code AI helps generate.

AI can help create a study guide for your midterm, act as the tutor who explains a concept in a different way or serve as the practice partner who never gets tired. What it shouldn’t be is the ghostwriter of your essays or the thinker that forms your thoughts.

This new school year, Northwood, along with other IUSD schools, will include department-specific AI usage guidelines in its syllabi, and advisors will lead lessons on responsible AI use at the start of the year. Northwood will also begin integrating Google Gemini into its AI-detection systems.

“It’s not realistic to just pretend AI doesn’t exist,” principal Eric Keith said. “It is, without a question, going to be a large part of what we do as a school, as an educational system, not just Northwood, but education in general.”

The question isn’t whether AI should be part of our future; it’s whether we’ll use it as a crutch or as a catalyst. By guiding its use instead of banning it, we will be teaching students not only when it’s ethical to use AI, but also when it is not.